Interpretability and explainability are crucial for safety-critical AI systems because:

- They enable verification of system behavior and identification of potential risks

- They facilitate compliance with safety regulations and standards

- They help build trust with stakeholders and end-users

- They support effective debugging and improvement of AI systems

- They allow for better integration of human oversight in AI-driven processes

In our journey through machine learning models for house price prediction, we’ve explored various algorithms and techniques to improve their performance.

Now, let’s delve into a crucial aspect of machine learning that often determines the real-world applicability of our models: Interpretability and Explainability.

Contents

- Understanding Interpretability and Explainability

- Importance in Machine Learning

- Techniques for Model Interpretation

- Tools for Explainable AI

- Case Study: Interpreting Our House Price Prediction Models

- Best Practices and Considerations

- Conclusion

You can find the complete code for this analysis in my GitHub repository.

1. Understanding Interpretability and Explainability

Interpretability refers to the degree to which a human can understand the cause of a decision made by a machine learning model. Explainability goes a step further, involving the detailed explanation of the internal mechanics of a model in human terms.

In simple terms:

- Interpretability: How the model works at a high level.

- Explainability: Detailed explanations for specific predictions.

2. Importance in Machine Learning

Why do interpretability and explainability matter?

✓ Trust: Stakeholders trust models they can understand.

✓ Regulatory Compliance: Certain industries require explainable models for legal reasons.

✓ Debugging: Understanding model behavior helps in identifying and fixing errors.

✓ Fairness: Interpretable models can be audited for bias and discrimination.

✓ Scientific Understanding: Explainable models can lead to new insights in research.

3. Techniques for Model Interpretation

Here are some common techniques for interpreting machine learning models:

Feature Importance: Measures the influence of each feature on the model’s predictions, helping to identify which features drive decisions.
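To make feature importance concrete, here is a minimal sketch of permutation importance, one common way to measure it. The idea is to shuffle one feature at a time and record how much the prediction error grows. The model and data below are hypothetical and synthetic; the column names are borrowed from the house-price dataset only for readability. scikit-learn offers a tested version as `sklearn.inspection.permutation_importance`.

```python
import numpy as np

FEATURES = ["OverallQual", "GrLivArea", "TotalBsmtSF"]

def predict(X):
    """Hypothetical stand-in for a trained model; TotalBsmtSF is deliberately unused."""
    return 30_000 * X[:, 0] + 60 * X[:, 1] + 0 * X[:, 2]

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Our own minimal version: average increase in MSE when one column is shuffled."""
    rng = np.random.default_rng(seed)
    baseline = mse(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling one column breaks its link to the target but keeps its distribution.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            increases.append(mse(y, predict(X_perm)) - baseline)
        importances[j] = np.mean(increases)
    return importances

# Synthetic data, not the Ames dataset: targets come from the hypothetical model itself.
rng = np.random.default_rng(42)
X = np.column_stack([
    rng.integers(1, 11, size=500),     # quality score 1-10
    rng.uniform(500, 4000, size=500),  # above-ground living area (sq ft)
    rng.uniform(0, 2000, size=500),    # basement area (sq ft)
]).astype(float)
y = predict(X)

scores = permutation_importance(predict, X, y)
for name, score in sorted(zip(FEATURES, scores), key=lambda t: -t[1]):
    print(f"{name:12s} {score:,.0f}")
```

Because this toy model never uses `TotalBsmtSF`, shuffling it leaves the error unchanged and its importance comes out as exactly zero.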

Partial Dependence Plots (PDPs): Visualize how the predicted outcome changes, on average, as one feature (or a pair of features) varies while the other features keep their observed values. Two-feature PDPs can also reveal interactions between features.
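A one-way partial dependence curve can be computed by overwriting one feature with each value on a grid and averaging the model's predictions over the dataset. The sketch below assumes a hypothetical model with a quality-by-area interaction and synthetic data. In practice, scikit-learn's `PartialDependenceDisplay.from_estimator` computes and plots PDPs for fitted estimators.

```python
import numpy as np

def partial_dependence(predict, X, feature, grid):
    """One-way partial dependence: mean prediction with `feature` fixed at each grid value."""
    averages = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value  # overwrite the column for every row
        averages.append(float(np.mean(predict(X_mod))))
    return np.array(averages)

def predict(X):
    """Hypothetical model with a quality x area interaction term."""
    return 10_000 * X[:, 0] + 50 * X[:, 1] + 5 * X[:, 0] * X[:, 1]

# Synthetic data (not the Ames dataset).
rng = np.random.default_rng(0)
X = np.column_stack([
    rng.integers(1, 11, 200),     # quality score
    rng.uniform(500, 4000, 200),  # living area (sq ft)
]).astype(float)

grid = np.array([2.0, 5.0, 8.0])
pd_quality = partial_dependence(predict, X, feature=0, grid=grid)
print(pd_quality)
```

In this toy model the effect of quality depends on living area, yet the one-way curve shows a single averaged slope. Plotting individual conditional expectation (ICE) curves (`kind="individual"` or `kind="both"` in scikit-learn) or a two-feature PDP is one way to surface that interaction.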

SHAP Values: Quantify the contribution of each feature to a prediction, providing both global and local explanations.
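SHAP values are Shapley values from cooperative game theory: each feature receives its average marginal contribution over all coalitions of the other features. The brute-force sketch below uses one common definition of a coalition's value, the mean prediction when features outside the coalition are filled in from background rows (often called the interventional approach). The model and data are hypothetical. The number of coalitions grows exponentially with the number of features, which is why the `shap` library relies on approximations and on specialized algorithms such as `shap.TreeExplainer` for tree ensembles.

```python
from itertools import combinations
from math import factorial

import numpy as np

def coalition_value(predict, x, background, subset):
    """v(S): mean prediction with features in S taken from x and the rest from background rows."""
    X = background.copy()
    idx = list(subset)
    if idx:
        X[:, idx] = x[idx]
    return float(np.mean(predict(X)))

def exact_shapley(predict, x, background):
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(x)
    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                gain = (coalition_value(predict, x, background, subset + (i,))
                        - coalition_value(predict, x, background, subset))
                phi[i] += weight * gain
    return phi

def predict(X):
    """Hypothetical model: two additive terms, one interaction, and an unused 4th feature."""
    return 3 * X[:, 0] + 2 * X[:, 1] + X[:, 0] * X[:, 2]

rng = np.random.default_rng(0)
background = rng.normal(size=(100, 4))  # synthetic background data
x = np.array([1.0, 2.0, 3.0, 4.0])      # the instance to explain

phi = exact_shapley(predict, x, background)
print(phi)
```

The values satisfy the efficiency property: they add up to the instance's prediction minus the average prediction over the background data, and the unused fourth feature receives exactly zero.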

LIME: Explains individual predictions by approximating the model locally with a simpler, interpretable model.
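The core of LIME can be sketched in three steps: perturb the instance, weight the perturbed samples by their proximity to it, and fit a weighted linear model whose coefficients serve as the local explanation. This is a simplified, LIME-style sketch under stated assumptions (Gaussian perturbations with a hypothetical per-feature `scale`, continuous features only, plain weighted least squares). The `lime` package's `LimeTabularExplainer` differs in details, for example by discretizing continuous features by default and fitting a regularized linear model.

```python
import numpy as np

def local_surrogate(predict, x, scale, n_samples=5000, kernel_width=None, seed=0):
    """LIME-style sketch: fit a proximity-weighted linear model around instance x."""
    rng = np.random.default_rng(seed)
    d = len(x)
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # similar to LIME's tabular default width
    # 1. Sample perturbations around x (continuous features, no discretization).
    Z = x + rng.normal(size=(n_samples, d)) * scale
    # 2. Weight samples by an exponential kernel on the standardized distance to x.
    dist = np.sqrt((((Z - x) / scale) ** 2).sum(axis=1))
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 3. Weighted least squares: predict(Z) ~ intercept + Z @ coefs.
    A = np.column_stack([np.ones(n_samples), Z])
    sw = np.sqrt(weights)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], predict(Z) * sw, rcond=None)
    return beta[0], beta[1:]

def predict(X):
    """Hypothetical nonlinear model: quadratic in feature 0, linear in feature 1."""
    return X[:, 0] ** 2 + 3 * X[:, 1]

x = np.array([2.0, 1.0])
intercept, coefs = local_surrogate(predict, x, scale=np.array([0.1, 0.1]))
print(coefs)  # should be close to the model's gradient at x, which is (4, 3)
```

For a smooth model and a small neighborhood, the surrogate's coefficients approximate the model's local slopes; widening `scale` or `kernel_width` trades locality for stability.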

4. Tools for Explainable AI

Ensuring that AI models are interpretable is crucial for building trust and understanding their decisions. Several powerful tools have been developed to help with model interpretation:

SHAP: SHapley Additive exPlanations (SHAP) values offer a unified framework for understanding feature importance across various models. SHAP explains how each feature contributes to specific predictions, making complex models more interpretable.

LIME: Local Interpretable Model-agnostic Explanations (LIME) explains individual predictions by approximating the original model, in the neighborhood of a single instance, with a simpler, interpretable one. This tool is particularly useful for gaining insights into black-box models.

ELI5: A Python library for debugging classifiers and explaining predictions. ELI5 provides clear visualizations that show how features influence outcomes, enhancing model transparency.

InterpretML: Microsoft’s InterpretML toolkit supports both inherently interpretable models and black-box explainers, offering insights into feature importance and model behavior in an accessible way.

5. Case Study: Interpreting Our House Price Prediction Models

In this case study, we explore how various machine learning interpretation techniques can be applied to understand a Random Forest model trained to predict house prices.

To ensure a comprehensive understanding of the model’s decision-making process, we used SHAP, LIME, and ELI5.

Here’s how these tools provided insights into our model.

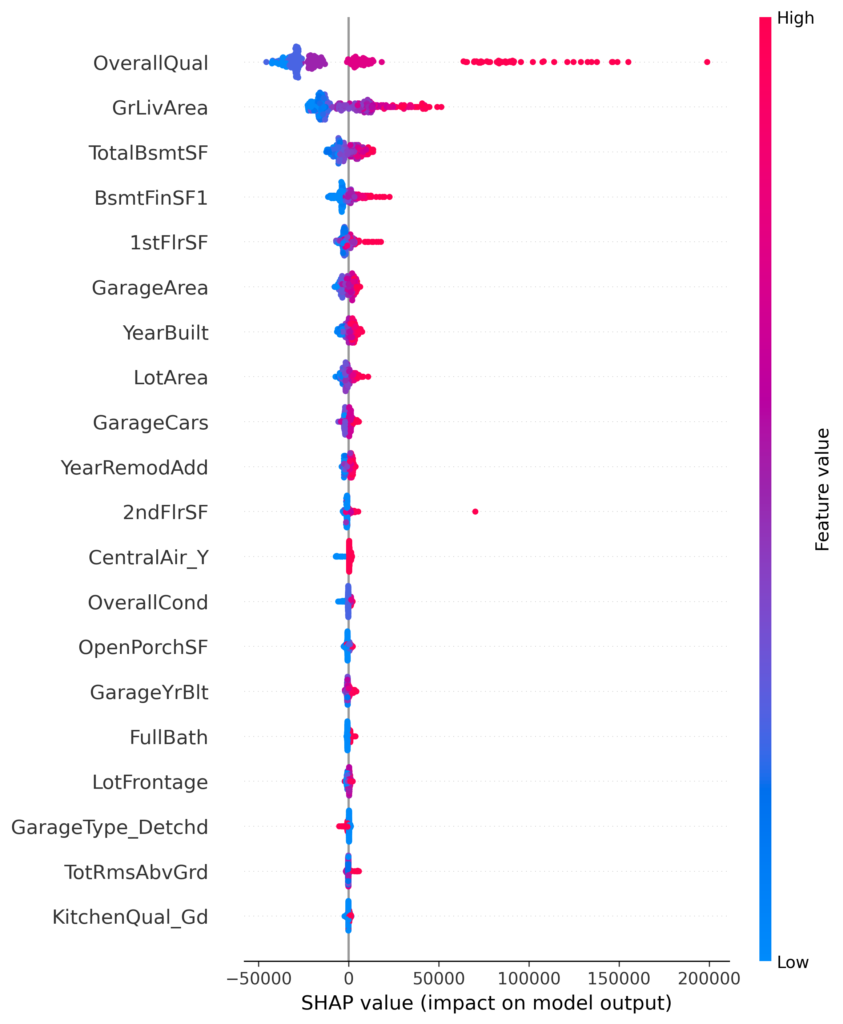

SHAP (SHapley Additive exPlanations) Analysis

The color gradient in the plot (from blue to red) indicates the feature’s value, where red represents higher feature values. For instance, higher “OverallQual” and “GrLivArea” lead to higher house price predictions.

OverallQual: The SHAP summary plot shows that “OverallQual” is the most influential feature in predicting house prices, with higher quality leading to higher predicted prices. This feature has the most substantial impact: the summary plot orders features by mean absolute SHAP value, and its SHAP values are broadly distributed.

GrLivArea: “GrLivArea” (above-ground living area) is another critical feature, with larger living areas generally increasing the house price prediction.

TotalBsmtSF: The total basement square footage is also a significant contributor, with larger basements correlating positively with higher house prices.
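The ranking in a SHAP summary plot comes from each feature's mean absolute SHAP value, and each dot's horizontal position is one house's SHAP value for that feature. The sketch below reproduces that bookkeeping for a hypothetical linear model on synthetic data, where SHAP values have a simple closed form when features are treated as independent. It illustrates the computation only and does not reproduce the results above.

```python
import numpy as np

features = ["OverallQual", "GrLivArea", "TotalBsmtSF"]
weights = np.array([30_000.0, 60.0, 25.0])  # hypothetical linear model
intercept = 10_000.0

# Synthetic houses (not the Ames data used in this post).
rng = np.random.default_rng(1)
X = np.column_stack([
    rng.integers(1, 11, 1000),     # quality score 1-10
    rng.uniform(500, 4000, 1000),  # living area (sq ft)
    rng.uniform(0, 2000, 1000),    # basement area (sq ft)
]).astype(float)
predictions = intercept + X @ weights

# For a linear model with independent features, SHAP values have a closed form:
# phi_ij = w_j * (x_ij - mean_j). Each row sums to prediction minus average prediction.
shap_values = weights * (X - X.mean(axis=0))

# A SHAP summary plot orders features by mean |SHAP| (global importance);
# the min/max show the horizontal spread of each feature's dots.
global_importance = np.abs(shap_values).mean(axis=0)
for j in np.argsort(-global_importance):
    col = shap_values[:, j]
    print(f"{features[j]:12s} mean|SHAP|={global_importance[j]:>9,.0f} "
          f"spread=[{col.min():,.0f}, {col.max():,.0f}]")
```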

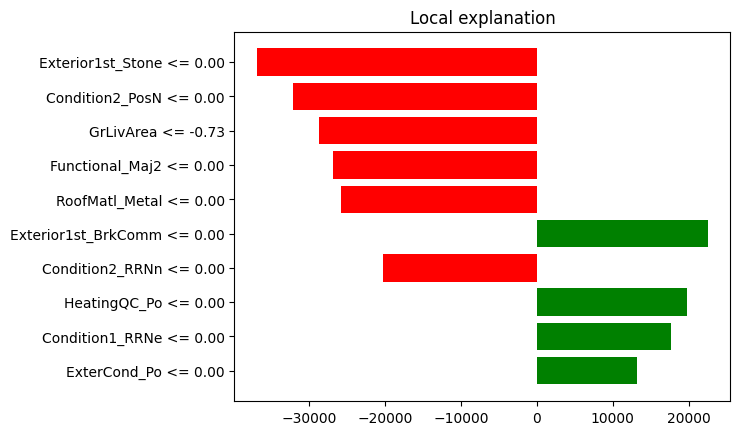

LIME (Local Interpretable Model-agnostic Explanations) Analysis

The LIME explanation provides a local interpretation for a specific instance (house). The plot indicates which features contribute positively (in green) and negatively (in red) to the model’s prediction.

Exterior1st_Stone: This feature makes the largest negative contribution to the prediction for this particular house. Because LIME reports each feature as a condition on its value (for a one-hot column, whether the indicator is 0 or 1), the condition shown in the plot determines whether it is the presence or the absence of a stone exterior that lowers the predicted price for this instance.

Condition2_RRNn and HeatingQC_Po: These features contribute positively, meaning that this house’s values for them (again, read from the conditions shown in the plot) push the prediction toward a higher price.

This local explanation helps us understand why the model made a particular prediction for an individual house.

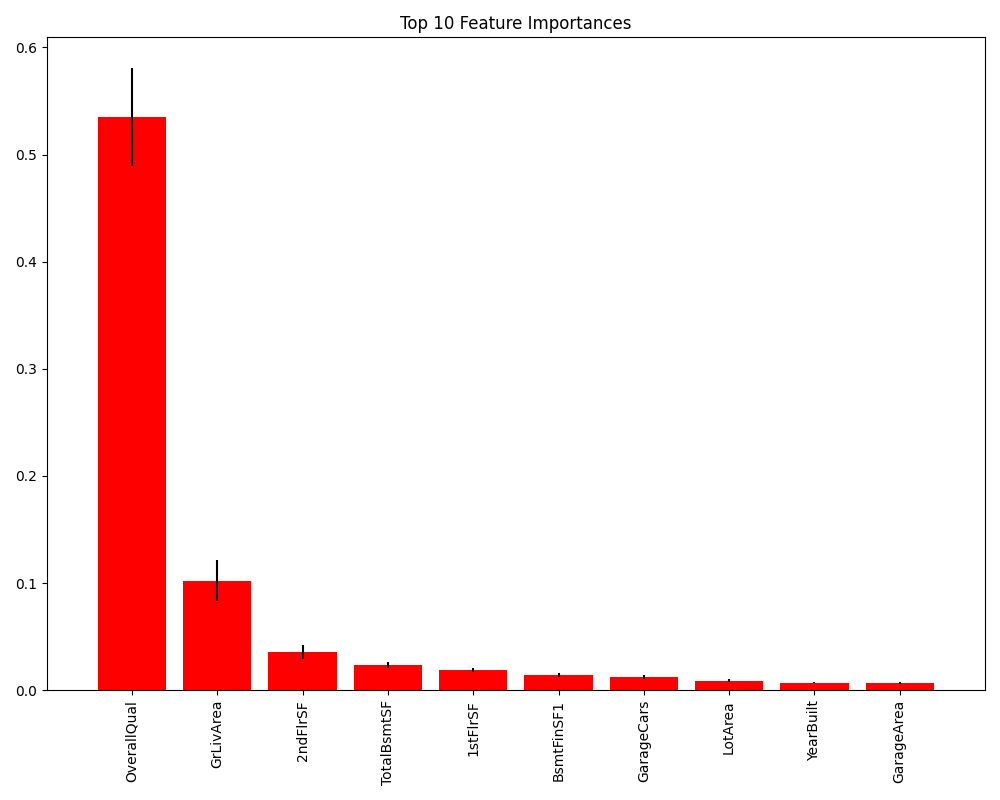

ELI5 Top 10 Feature Importance

The ELI5 plot ranks the top 10 most important features used by the Random Forest model.

OverallQual is by far the most important feature, which aligns with the SHAP summary plot. This suggests that the overall material and finish quality is the primary determinant of house price in the dataset.

GrLivArea and 2ndFlrSF (second floor square footage) are also significant contributors, indicating that larger living spaces are generally associated with higher house prices.

Other important features include TotalBsmtSF, BsmtFinSF1 (type 1 finished square footage in the basement), and GarageCars (garage capacity in cars). Together with the features above, they indicate that overall quality and the size of a house’s living, basement, and garage areas are the main drivers of its predicted price.

6. Best Practices and Considerations

Start Simple

Begin with interpretable models like linear regression or decision trees. These models offer clear insights into feature impact and help build a strong understanding before advancing to more complex models.
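As an illustration of how directly a linear model can be read, the sketch below fits ordinary least squares with NumPy to synthetic prices generated from known, hypothetical coefficients plus noise. The fitted coefficients should land close to the ones used to generate the data.

```python
import numpy as np

# Synthetic data from known, hypothetical coefficients plus Gaussian noise.
rng = np.random.default_rng(7)
n = 300
quality = rng.integers(1, 11, n).astype(float)
area = rng.uniform(500, 4000, n)
price = 15_000 + 25_000 * quality + 55 * area + rng.normal(0, 5_000, n)

# Ordinary least squares via NumPy; the design matrix has an intercept column.
A = np.column_stack([np.ones(n), quality, area])
(intercept, b_quality, b_area), *_ = np.linalg.lstsq(A, price, rcond=None)

# Each coefficient reads directly as "price change per unit, other features held fixed".
print(f"intercept:             {intercept:,.0f}")
print(f"per quality point:     {b_quality:,.0f}")
print(f"per sq ft living area: {b_area:.1f}")
```

Each coefficient is a direct statement about the model (price change per unit of a feature, others held fixed), which is exactly the kind of statement that needs extra tooling such as SHAP for a random forest.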

Use Multiple Techniques

Different interpretation methods, such as SHAP, LIME, and permutation importance, provide complementary insights. Combining these techniques gives a broader understanding of how your model makes decisions.
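One simple way to check whether different techniques tell the same story is to compare the feature rankings they produce. The sketch below computes a Spearman rank correlation and a top-k overlap between mean absolute SHAP values and permutation importance for a hypothetical linear model on synthetic data. The helper functions are illustrative and assume no tied scores; `scipy.stats.spearmanr` handles ties.

```python
import numpy as np

def ranks(scores):
    """Rank of each feature (0 = most important); assumes no ties."""
    order = np.argsort(-np.asarray(scores))
    r = np.empty(len(order), dtype=int)
    r[order] = np.arange(len(order))
    return r

def spearman(a, b):
    """Spearman rank correlation for tie-free scores."""
    d = ranks(a) - ranks(b)
    n = len(d)
    return 1 - 6 * float(np.sum(d ** 2)) / (n * (n ** 2 - 1))

def top_k_overlap(a, b, k):
    """Fraction of the top-k features that both methods share."""
    top_a = set(np.argsort(-np.asarray(a))[:k].tolist())
    top_b = set(np.argsort(-np.asarray(b))[:k].tolist())
    return len(top_a & top_b) / k

# Two importance measures for the same hypothetical linear model on synthetic data.
rng = np.random.default_rng(3)
w = np.array([30_000.0, 60.0, 25.0, 0.0])  # 4th feature is ignored by the model
X = np.column_stack([
    rng.integers(1, 11, 800), rng.uniform(500, 4000, 800),
    rng.uniform(0, 2000, 800), rng.normal(size=800),
]).astype(float)
y = X @ w

mean_abs_shap = np.abs(w * (X - X.mean(axis=0))).mean(axis=0)  # closed form for linear models
perm = []
for j in range(X.shape[1]):
    X_perm = X.copy()
    X_perm[:, j] = rng.permutation(X_perm[:, j])
    perm.append(float(np.mean((y - X_perm @ w) ** 2)))  # MSE increase (baseline MSE is 0)

print("Spearman:", spearman(mean_abs_shap, perm))
print("Top-2 overlap:", top_k_overlap(mean_abs_shap, perm, k=2))
```

On this toy model both methods agree perfectly. On real models, low agreement is a signal to investigate further, for example correlated features that the methods credit differently.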

Consider the Audience

Tailor your explanations to the technical expertise of your audience. Use detailed explanations for technical stakeholders, but simplify and focus on key takeaways for non-technical ones, using visuals or analogies.

Be Aware of Limitations

Each interpretation method has its own assumptions and limitations. Understanding these helps avoid over-reliance on a single method and ensures a balanced approach to model interpretation.

Combine with Domain Knowledge

Pair model interpretations with expert domain knowledge. This ensures that the insights make sense in a real-world context and enhances the practical value of your interpretations.

Following these practices will improve the clarity, reliability, and usefulness of your model interpretations.

7. Conclusion

In this post, we’ve explored the importance of interpretability and explainability in machine learning. By applying these techniques to our house price prediction models, we gained valuable insights into how our models make decisions.

Understanding model behavior not only builds trust and ensures compliance but also opens doors to model improvement and scientific discovery. In real-world scenarios, a slightly less accurate but fully interpretable model might be more valuable than a highly accurate but unexplainable one.

As data scientists, it’s our responsibility to bridge the gap between complex algorithms and human understanding.