In this analysis, I explore the process of predicting e-commerce sales using Python, leveraging data from the Google Analytics Sample Dataset in BigQuery.

While BigQuery ML provides a powerful and scalable platform for building machine learning models, Python offers more flexibility and control, which can be particularly beneficial when working with complex data processing and custom model development.

However, handling large datasets in Python presents unique challenges, especially in terms of memory management and processing efficiency.

You can find the complete code in my GitHub repository.

Contents

- Results of BigQuery ML Analysis as Motivation

- Data Preparation

- Feature Engineering

- Random Forest Model

- Results

- Conclusion

1. Results of BigQuery ML Analysis as Motivation

The initial BigQuery ML analysis provided a solid foundation for understanding the predictive capabilities of models like Logistic Regression, Random Forest, and XGBoost.

While BigQuery ML’s scalability and SQL integration enabled rapid model development, limitations in model complexity and feature engineering prompted further exploration using Python.

Upon expanding the feature set, we observed near-perfect performance across all models:

| Model | AUC | Precision | Recall | Accuracy | F1 Score |
| --- | --- | --- | --- | --- | --- |
| Logistic Regression | 1.00 | 0.99 | 0.99 | 1.00 | 0.99 |
| Random Forest Classifier | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| XGBoost | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |

Random Forest and XGBoost scored 1.00 on precision, recall, and F1 (to two decimal places), meaning they classified essentially every sales event in the evaluation data correctly.

This significant improvement underscores the value of comprehensive feature engineering and the power of ensemble methods like Random Forest in managing complex datasets. Given its robustness and interpretability, Random Forest was chosen as the optimal model for predicting e-commerce sales in this analysis.

2. Data Preparation

Working with large datasets in Python presents significant challenges, especially regarding memory usage and processing time. The Google Analytics Sample Dataset, with millions of rows, requires careful handling to avoid memory overload and ensure efficient processing.

1) Chunked Data Processing

Instead of loading the entire dataset into memory at once, I processed the data in smaller chunks.

By fetching and processing data in one-day increments, I reduced the memory footprint and allowed for more manageable processing.
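The public Google Analytics sample data is stored as one table per day (`ga_sessions_20160801` through `ga_sessions_20170801` in `bigquery-public-data.google_analytics_sample`), so a one-day chunk maps naturally onto one table. The loop can be sketched roughly as follows. This is a minimal illustration, not the code from the repository: `daily_table_ids` and `process_in_daily_chunks` are hypothetical names, and the query runner is passed in as `fetch` so the loop does not depend on a particular client.

```python
from datetime import date, timedelta
from pathlib import Path
from typing import Callable, Iterator, List

import pandas as pd

# Public GA sample dataset: one table per day, e.g. ga_sessions_20160801.
TABLE_PREFIX = "bigquery-public-data.google_analytics_sample.ga_sessions_"


def daily_table_ids(start: date, end: date) -> Iterator[str]:
    """Yield one fully qualified table ID per day from start to end (inclusive)."""
    day = start
    while day <= end:
        yield f"{TABLE_PREFIX}{day:%Y%m%d}"
        day += timedelta(days=1)


def process_in_daily_chunks(
    fetch: Callable[[str], pd.DataFrame],
    process: Callable[[pd.DataFrame], pd.DataFrame],
    start: date,
    end: date,
    out_dir: str,
) -> List[Path]:
    """Fetch, process and save one day at a time so only one chunk is in memory."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for table_id in daily_table_ids(start, end):
        # Hypothetical query; selecting only the needed columns would use less memory.
        chunk = fetch(f"SELECT * FROM `{table_id}`")
        result = process(chunk)
        # Name each output file after its date suffix, e.g. 20160801.csv
        path = out / f"{table_id.rsplit('_', 1)[-1]}.csv"
        result.to_csv(path, index=False)
        written.append(path)
        del chunk, result  # drop references before fetching the next day
    return written
```

With the `google-cloud-bigquery` client, `fetch` could be `lambda sql: client.query(sql).to_dataframe()` where `client = bigquery.Client()`. Keeping the fetch step injectable also makes the loop easy to test with a fake data source.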

2) Data Cleaning and Flattening

The raw data contains nested and JSON-like structures that need to be flattened for easier analysis.

This process is memory-intensive, so it was important to clean and flatten the data in chunks, saving the intermediate results to disk.
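Assuming each daily chunk arrives as a pandas DataFrame whose nested record columns (such as `totals` or `device`) hold Python dicts, pandas' `json_normalize` can flatten them into ordinary columns. The sketch below is illustrative only: `flatten_chunk` and `flatten_and_save` are hypothetical names, and repeated fields such as `hits`, which arrive as lists, would still need their own explode-and-aggregate step.

```python
from pathlib import Path

import pandas as pd


def flatten_chunk(chunk: pd.DataFrame) -> pd.DataFrame:
    """Expand dict-valued columns into flat columns.

    A record such as {"totals": {"pageviews": 3}} becomes a column named
    "totals_pageviews". List-valued fields are left untouched.
    """
    records = chunk.to_dict(orient="records")
    return pd.json_normalize(records, sep="_")


def flatten_and_save(chunk: pd.DataFrame, path: str) -> str:
    """Flatten one chunk and write it to disk so the in-memory copy can be freed."""
    flat = flatten_chunk(chunk)
    Path(path).parent.mkdir(parents=True, exist_ok=True)
    flat.to_csv(path, index=False)
    return path
```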

3) Imputation and Scaling

To prepare the data for modeling, I applied imputation to handle missing values and scaling to normalize the features.

These steps were also performed in chunks to manage memory usage.
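One way to impute and scale without holding all chunks in memory is a two-pass scheme: first accumulate per-column counts, sums, and sums of squares across chunks, then standardize each chunk with the resulting mean and standard deviation. The sketch below assumes mean imputation and standardization, which may differ from what the repository does; scikit-learn's `StandardScaler.partial_fit` is an alternative for the first pass. The function names are hypothetical. Note that the statistics come from observed values only, so they differ slightly from fitting a scaler after imputation.

```python
import numpy as np
import pandas as pd


def fit_stats_in_chunks(chunks, columns):
    """Pass 1: accumulate per-column count, sum and sum of squares over all chunks.

    Missing values are skipped, so mean and standard deviation come from
    observed values only.
    """
    n = pd.Series(0.0, index=columns)
    total = pd.Series(0.0, index=columns)
    total_sq = pd.Series(0.0, index=columns)
    for chunk in chunks:
        x = chunk[columns].astype(float)
        n += x.notna().sum()
        total += x.sum()  # pandas skips NaN by default
        total_sq += (x ** 2).sum()
    mean = total / n
    var = (total_sq / n - mean ** 2).clip(lower=0.0)  # population variance
    std = np.sqrt(var).replace(0.0, 1.0)  # constant columns: avoid dividing by zero
    return mean, std


def impute_and_scale(chunk, columns, mean, std):
    """Pass 2: standardize one chunk, then fill missing values with 0.

    In standardized units the column mean is 0, so filling with 0 is the same
    as imputing the mean before scaling.
    """
    out = chunk.copy()
    scaled = (out[columns].astype(float) - mean) / std
    out[columns] = scaled.fillna(0.0)
    return out
```

In practice the first pass could iterate over the saved daily files (for example with `pd.read_csv(path, chunksize=...)`), and the second pass would transform and re-save each file.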

3. Feature Engineering

Feature engineering is a critical step in preparing the dataset for modeling. In this analysis, I derived new features that capture time-based patterns, user engagement, device type, traffic source, and geographical information.

High Dimensionality: The dataset contains many categorical features that, when encoded, can lead to high dimensionality. This increases the computational burden and can negatively impact model performance. To address this, I carefully selected and engineered features that are most likely to influence sales predictions.

Feature Interactions: Capturing interactions between features (e.g., between traffic source and device type) can be crucial for model performance. In Python, I used custom feature engineering techniques to create these interactions.
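For illustration, here is a hypothetical `engineer_features_sketch` covering the feature families named above. It is not the author's `engineer_features`: it assumes flattened column names in `parent_child` form (`totals_pageviews`, `totals_hits`, `device_deviceCategory`, `trafficSource_medium`, `geoNetwork_country`) plus `visitStartTime` in Unix seconds, and it limits one-hot dimensionality by keeping only the most frequent countries.

```python
import pandas as pd


def engineer_features_sketch(df: pd.DataFrame, top_n_countries: int = 20) -> pd.DataFrame:
    """Hypothetical feature builder for flattened GA session rows."""
    out = pd.DataFrame(index=df.index)

    # Time-based patterns from the session start (Unix seconds, UTC)
    start = pd.to_datetime(df["visitStartTime"], unit="s")
    out["hour"] = start.dt.hour
    out["day_of_week"] = start.dt.dayofweek  # Monday = 0
    out["is_weekend"] = (out["day_of_week"] >= 5).astype(int)

    # User engagement
    pageviews = df["totals_pageviews"].fillna(0)
    hits = df["totals_hits"].fillna(0)
    out["pageviews"] = pageviews
    out["hits"] = hits
    # Sessions with zero pageviews get a ratio of 0 instead of a division error
    out["hits_per_pageview"] = (hits / pageviews.where(pageviews > 0)).fillna(0.0)

    # Geography: keep the most frequent countries and bucket the rest as "Other"
    top = df["geoNetwork_country"].value_counts().index[:top_n_countries]
    country = df["geoNetwork_country"].where(df["geoNetwork_country"].isin(top), "Other")

    # Device type, traffic source, and their interaction as a combined category
    medium = df["trafficSource_medium"].astype(str)
    device = df["device_deviceCategory"].astype(str)
    categorical = pd.DataFrame({
        "device": device,
        "medium": medium,
        "country": country,
        "medium_x_device": medium + "_" + device,
    })
    dummies = pd.get_dummies(categorical, dtype=int)
    return pd.concat([out, dummies], axis=1)
```

Encoding the interaction as one combined category keeps it explicit for the model; with many sources and devices, the same top-N bucketing used for countries could also be applied to it.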

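```python
# Example of feature engineering.
# df_cleaned is the cleaned, flattened data from the previous step, and
# engineer_features() is defined in the complete code on GitHub.
df_engineered = engineer_features(df_cleaned)

# Optional: save the engineered features for later use
df_engineered.to_csv('engineered_features.csv', index=False)
```

4. Random Forest Model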

To predict e-commerce sales, I selected the Random Forest Regressor for its robustness and its ability to handle high-dimensional data with minimal preprocessing. Unlike the BigQuery ML comparison in section 1, which framed the task as classification, this model predicts sales as a continuous value. It was trained on the engineered features and evaluated with standard regression metrics: MSE, RMSE, MAE, and R².

Feature Selection: By carefully selecting features that have the most predictive power, I reduced the model’s complexity and training time.

Parallel Processing: Random Forest models can be trained in parallel, which speeds up the training process, especially when dealing with large datasets.
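The two ideas above can be combined in a short scikit-learn sketch. It is not the author's exact pipeline: the function name, the 80/20 split, the tree counts, and the use of `SelectFromModel` with a median-importance threshold as the feature-selection step are all assumptions. `X` is assumed to be a numeric DataFrame of engineered features and `y` a numeric sales target (its exact definition is not given here). `n_jobs=-1` lets scikit-learn build trees on all available CPU cores.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SelectFromModel
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split


def train_sales_forest(X: pd.DataFrame, y: pd.Series, random_state: int = 42):
    """Hypothetical helper: select features, fit a forest, report regression metrics."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=random_state
    )

    # Feature selection: a quick forest ranks features; keep those at or above median importance
    selector = SelectFromModel(
        RandomForestRegressor(n_estimators=50, n_jobs=-1, random_state=random_state),
        threshold="median",
    )
    selector.fit(X_train, y_train)
    keep = X_train.columns[selector.get_support()]

    # Final model on the selected features; n_jobs=-1 trains trees in parallel
    model = RandomForestRegressor(n_estimators=200, n_jobs=-1, random_state=random_state)
    model.fit(X_train[keep], y_train)

    pred = model.predict(X_test[keep])
    mse = mean_squared_error(y_test, pred)
    metrics = {
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),
        "MAE": mean_absolute_error(y_test, pred),
        "R2": r2_score(y_test, pred),
    }
    return model, list(keep), metrics
```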

5. Results

The sales prediction analysis using a Random Forest Regressor yielded highly accurate results, as indicated by the evaluation metrics. Here’s a summary of the key findings:

Model Performance:

- Mean Squared Error (MSE): The model achieved a very low MSE of 6.6751e-05, indicating that the average squared difference between the actual and predicted sales values is minimal.

- Root Mean Squared Error (RMSE): The RMSE, the square root of the MSE and therefore expressed in the same units as the sales values, was also very low at 0.00817. This suggests that the model's predictions are close to the actual sales values.

- Mean Absolute Error (MAE): The MAE, the average absolute difference between actual and predicted sales, was 7.26e-05, further confirming the model's accuracy.

- R-squared (R²) Score: The model achieved an R² score of 0.99988, indicating that nearly all of the variance in the sales data is explained by the model.
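For reference, the four metrics are defined below, with $y_i$ the actual sales value, $\hat{y}_i$ the prediction, $\bar{y}$ the mean of the actual values, and $n$ the number of evaluation rows. The last line checks that the reported RMSE is consistent with the reported MSE.

```latex
\begin{aligned}
\mathrm{MSE}  &= \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \\
\mathrm{RMSE} &= \sqrt{\mathrm{MSE}} \\
\mathrm{MAE}  &= \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \\
R^2 &= 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \\
\sqrt{6.6751 \times 10^{-5}} &\approx 0.00817
\end{aligned}
```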

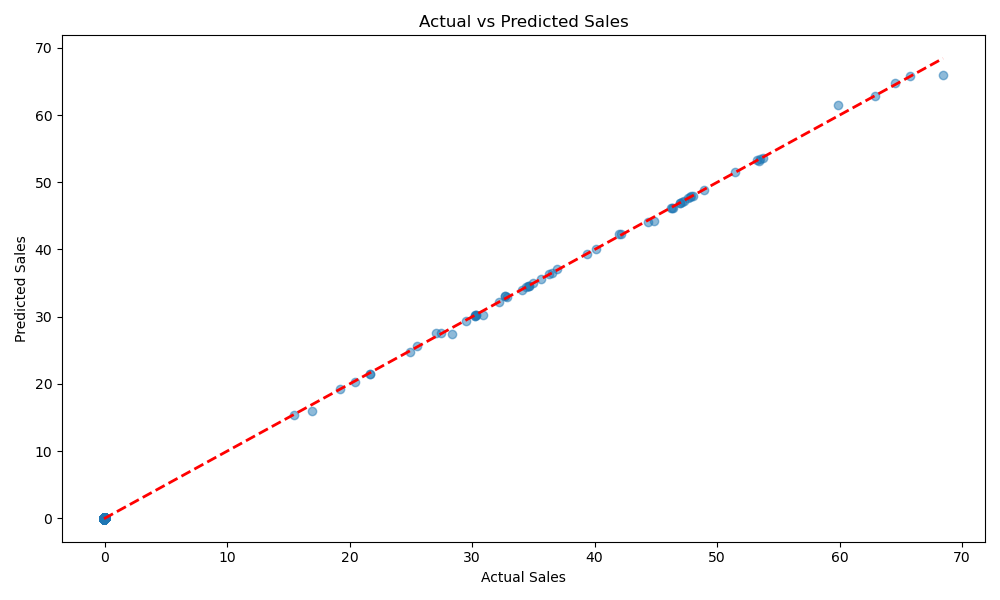

Visualization

I generated an Actual vs. Predicted Sales scatter plot, which shows near-perfect alignment between the actual and predicted values and further illustrates the model's accuracy.
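A plot like this can be produced with matplotlib. This is a minimal sketch rather than the code behind the figure: `plot_actual_vs_predicted` is a hypothetical helper, `y_true` and `y_pred` would be the test targets and the model's predictions, and the dashed red line marks y = x, where every point would sit if the predictions were perfect.

```python
import matplotlib

matplotlib.use("Agg")  # render without a display, e.g. in a script
import matplotlib.pyplot as plt
import numpy as np


def plot_actual_vs_predicted(y_true, y_pred, path=None):
    """Scatter actual vs. predicted values with a y = x reference line."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    fig, ax = plt.subplots(figsize=(6, 6))
    ax.scatter(y_true, y_pred, s=8, alpha=0.5)

    # Dashed y = x line spanning the range of both axes
    lo = min(y_true.min(), y_pred.min())
    hi = max(y_true.max(), y_pred.max())
    ax.plot([lo, hi], [lo, hi], linestyle="--", color="red")

    ax.set_xlabel("Actual sales")
    ax.set_ylabel("Predicted sales")
    ax.set_title("Actual vs. Predicted Sales")
    if path is not None:
        fig.savefig(path, dpi=150, bbox_inches="tight")
    return fig
```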

6. Conclusion

In this analysis, I demonstrated the process of predicting e-commerce sales using Python, building upon the foundation established by an initial exploration with BigQuery ML.

While BigQuery ML offered rapid model development and scalability within a SQL-based environment, Python provided the flexibility and control necessary for more advanced data processing and custom model development.

Scalability and Speed:

BigQuery ML excels at handling large datasets directly within the data warehouse, allowing for quick iteration and evaluation of machine learning models without the need to export data to external platforms.

Ease of Use:

Its seamless integration with SQL makes it accessible to data analysts familiar with SQL, streamlining the process of model creation and evaluation.

Rapid Prototyping:

BigQuery ML is ideal for rapidly developing models and gaining initial insights, especially when working within the Google Cloud ecosystem.

Limited Customization:

BigQuery ML offers less flexibility in feature engineering and model customization compared to Python, making it challenging to implement complex models or tailor the modeling process to specific needs.

Model Complexity Constraints:

The platform has limitations on model complexity and size, which can hinder the development of highly sophisticated models, particularly when working with large feature sets or complex data interactions.

Flexibility and Control:

Python provides far more flexibility than BigQuery ML in data processing, feature engineering, and model development. It allows for the implementation of advanced techniques and custom workflows tailored to specific data and business requirements.

Advanced Feature Engineering:

Python enables the creation of complex features and interactions, which can significantly enhance model performance, particularly in cases where the relationships within the data are intricate.

Robust Model Selection:

With Python, I could choose and fine-tune a Random Forest Regressor, a model well-suited for handling high-dimensional data and providing interpretability, ultimately leading to highly accurate sales predictions.

Memory Management:

Handling large datasets in Python can be challenging, particularly in terms of memory usage and processing efficiency. Careful management and optimization strategies, such as chunked data processing, are essential to prevent memory overload.

Processing Time:

Python may require longer processing times for large-scale data operations, especially when compared to the optimized infrastructure provided by BigQuery ML.

Final Thoughts

The combined use of BigQuery ML and Python allowed for a comprehensive approach to e-commerce sales prediction, leveraging the strengths of both platforms.

BigQuery ML provided a quick and scalable environment for initial exploration, while Python enabled deeper analysis and refinement, resulting in a robust and highly accurate predictive model.

This approach highlights the importance of selecting the right tools for different stages of data analysis, balancing the need for speed, flexibility, and precision when working with big data.
