As generative AI models become increasingly powerful and ubiquitous, the need for transparency and explainability in these systems has never been more critical.

These concepts are essential for building trust, ensuring ethical use, and complying with emerging regulations.

In this post, we will explore the importance of transparency and explainability in generative AI, discuss challenges, and provide real-world examples. Additionally, we will present Python code snippets to demonstrate practical techniques for improving transparency and explainability in AI models.

Understanding Transparency and Explainability

Transparency refers to openness about how an AI system works, its limitations, and the data it was trained on.

Explainability involves providing clear, understandable reasons for an AI system’s outputs or decisions.

In generative AI, these concepts help users understand why a model produced a particular output and how reliable that output might be. They matter for several reasons:

Building Trust: Users are more likely to trust and adopt AI systems they can understand.

Ethical Considerations: Transparency helps identify and address biases or unfair outcomes.

Regulatory Compliance: Many emerging AI regulations require some level of explainability.

Debugging and Improvement: Understanding model behavior aids in refining and enhancing AI systems.

User Empowerment: Explainable AI allows users to make informed decisions about when and how to use AI-generated content.

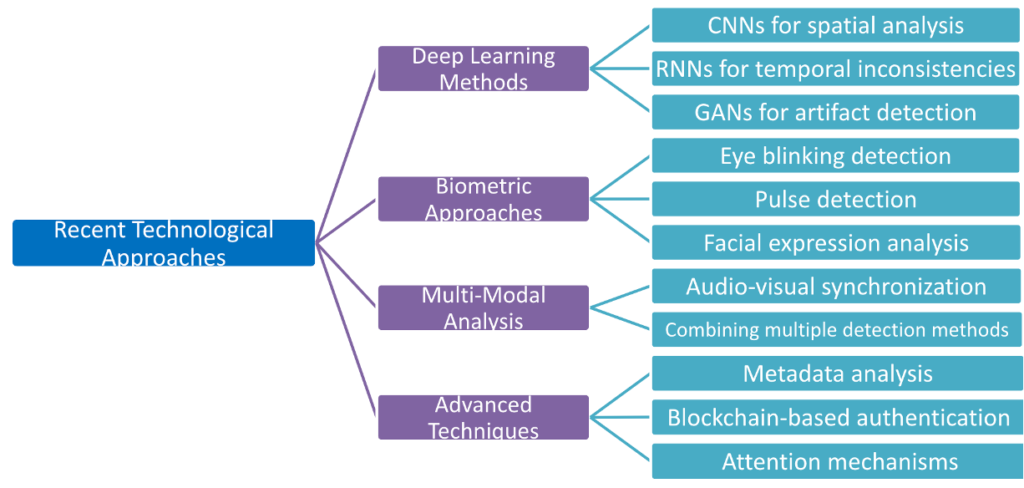

Recent Technologies for Transparency and Explainability

1. Attention Mechanisms

- Examples: Simulated attention weights, attention map visualizations.

- Explanation: Attention mechanisms highlight which parts of the input data the model focuses on during generation. This offers insights into how the model prioritizes different elements in text or images, especially in transformer-based models like GPT.

2. Feature and Token-Level Attribution

- Examples: SHAP for language models, Integrated Gradients, LIME.

- Explanation: These methods explain the contribution of individual features (e.g., tokens or pixels) to the final output. By attributing specific parts of the input to the generated result, they make the model’s decision-making process more interpretable.

3. Latent Space and Model Dissection

- Examples: Principal Component Analysis (PCA) for latent space, GAN dissection, StyleGAN layer analysis.

- Explanation: Latent space exploration and model dissection techniques help visualize and understand how internal representations in generative models contribute to the generated content.

4. Counterfactual Explanations and What-If Analysis

- Examples: Modifying inputs to see how outputs change, contrastive explanations.

- Explanation: Counterfactual methods allow users to explore how small changes to the input affect the generated output, providing transparency into the model’s decision boundaries and helping explain why certain outputs were generated instead of others.

5. Model Documentation and Ethical Frameworks

- Examples: Model Cards, Datasheets for Datasets, bias detection in generative AI.

- Explanation: Documenting models and datasets (e.g., Model Cards, Datasheets) is essential for transparency and accountability. These frameworks ensure that AI systems are designed with fairness, bias detection, and ethical governance in mind.
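The what-if analysis described in item 4 above can be sketched on a toy model. The example below is a minimal illustration, not a method from any particular library: `WEIGHTS`, `score`, `label`, and `single_token_counterfactuals` are hypothetical names, and the word weights stand in for a trained model. It searches for single-word substitutions that flip the predicted label, which is one simple form of counterfactual explanation.

```python
from typing import Dict, List, Tuple

# Hypothetical word weights standing in for a trained sentiment model.
WEIGHTS: Dict[str, float] = {
    "great": 2.0, "good": 1.0, "fine": 0.5,
    "slow": -1.0, "bad": -1.5, "terrible": -2.5,
}


def score(tokens: List[str]) -> float:
    """Sum the weights of known words; unknown words contribute 0."""
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def label(tokens: List[str]) -> str:
    """Decision boundary at 0: strictly positive scores are 'positive'."""
    return "positive" if score(tokens) > 0 else "negative"


def single_token_counterfactuals(
    tokens: List[str], candidates: List[str]
) -> List[Tuple[int, str, str]]:
    """Return (position, old_word, new_word) for every single-word
    substitution that changes the predicted label."""
    original = label(tokens)
    flips = []
    for i, old in enumerate(tokens):
        for new in candidates:
            if new == old:
                continue
            edited = tokens[:i] + [new] + tokens[i + 1:]
            if label(edited) != original:
                flips.append((i, old, new))
    return flips


tokens = "the app is great but slow".split()
flips = single_token_counterfactuals(tokens, candidates=["good", "fine"])
print(label(tokens))  # label of the unedited input
for position, old, new in flips:
    print(f"Replacing '{old}' at position {position} with '{new}' flips the label")
```

In this toy setup only the word "great" can be weakened enough to flip the label, which tells the user that the prediction hinges on that word. With a real generative model the same loop would compare generated outputs instead of labels.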

Real-World Examples

Scenario-Based Design for Explainability

A notable example of transparency and explainability in generative AI comes from the study “Investigating Explainability of Generative AI for Code through Scenario-based Design” by Jiao Sun and colleagues. The researchers explored the explainability needs of software engineers using AI tools for code translation, autocompletion, and natural-language-to-code conversion.

Through participatory workshops with 43 engineers, the study identified key user concerns such as understanding AI capabilities, the reasoning behind outputs, and how to influence AI behavior.

To address these needs, the researchers proposed practical features like:

- AI Documentation: Providing detailed information about the AI model, including training data and limitations.

- Uncertainty Indicators: Highlighting areas of low confidence to guide user attention.

- Attention Visualizations: Showing which input parts the AI focused on to demystify its decision-making.

- Social Transparency: Sharing other users’ interactions with the AI to foster collaboration and best practices.

This study exemplifies how user-centered design can enhance transparency and trust in generative AI, making these tools more accessible and reliable for engineers and other end users.

DeepExplain’s Transparent Approach to DeepFake Detection

Generative AI technologies like DeepFakes offer both impressive capabilities and significant challenges, particularly in maintaining information integrity and public trust. The paper “DeepExplain: Enhancing DeepFake Detection Through Transparent and Explainable AI” addresses these challenges by introducing the DeepExplain model—a sophisticated detection framework that combines CNNs and LSTM networks to accurately identify DeepFakes.

What sets DeepExplain apart is its emphasis on transparency and explainability. Using techniques like Gradient-weighted Class Activation Mapping (Grad-CAM) and SHapley Additive exPlanations (SHAP) values, DeepExplain makes the decision-making process of AI models more transparent.

Grad-CAM visualizes the specific video regions influencing the AI’s predictions, while SHAP values break down how each input feature contributes to the final decision.

This focus on transparency is vital in DeepFake detection, where the consequences of errors can be severe.

By offering clear insights into the AI’s reasoning, DeepExplain not only enhances detection accuracy but also builds trust by making the process understandable. This approach highlights the importance of designing AI systems that are both powerful and accountable, ensuring their ethical deployment in society.
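To make the idea behind SHAP values more concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature ordering. It does not use the SHAP library and is not the DeepExplain implementation: `toy_detector` and its three features (`blink`, `blur`, `sync`) are hypothetical stand-ins for a real detector, and brute-force enumeration is only practical for a handful of features.

```python
from itertools import permutations
from typing import Callable, List, Sequence


def shapley_values(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values: average each feature's marginal contribution
    over all orderings in which features are switched from baseline to x."""
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = f(current)
        for i in order:
            current[i] = x[i]
            value = f(current)
            totals[i] += value - previous
            previous = value
    return [t / len(orderings) for t in totals]


def toy_detector(features: Sequence[float]) -> float:
    """Hypothetical 'fake score': two additive cues plus one interaction."""
    blink, blur, sync = features
    return 0.5 * blink + 0.2 * blur + 0.3 * blink * sync


x = [1.0, 1.0, 1.0]         # the input being explained
baseline = [0.0, 0.0, 0.0]  # reference input with every cue absent
phi = shapley_values(toy_detector, x, baseline)

for name, value in zip(["blink", "blur", "sync"], phi):
    print(f"{name}: {value:.2f}")
print(f"sum of attributions: {sum(phi):.2f}")
```

The attributions add up to the difference between the model output for `x` and for the baseline, and the interaction term is split evenly between the two features involved. That additivity is what lets SHAP-style explanations break a single prediction down feature by feature.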

Python Code Example

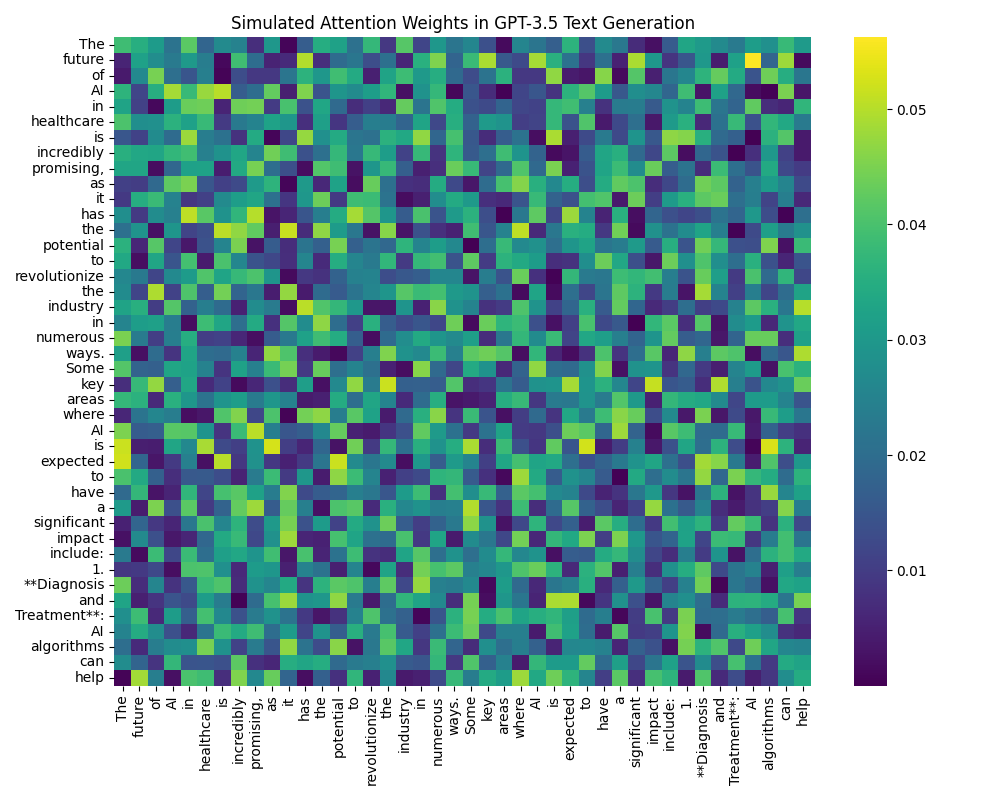

Simulated Attention Weights in GPT-3.5 Text Generation

In this section, I explore the use of simulated attention weights in text generation with GPT-3.5.

The OpenAI API does not expose GPT-3.5’s internal attention weights, unlike open-weight transformer models whose attention can be inspected directly. Simulated weights therefore cannot tell us how GPT-3.5 actually relates words to each other.

What the simulation can do is show what an attention visualization looks like and how to read one, which is useful preparation for working with real attention data.

Methodology

Using GPT-3.5, I generated a piece of text based on the prompt, “The future of AI in healthcare.” To simulate the attention mechanism, I generated a random matrix with one row and one column per word and normalized each row to sum to 1, mimicking the shape of an attention map in transformer models. These simulated weights were then used to visualize the interaction between words in the generated text.

Below is the Python code used for this experiment:

```python
import os
from getpass import getpass

import matplotlib.pyplot as plt
import numpy as np
import seaborn as sns
from openai import OpenAI

# Securely get the API key
api_key = os.environ.get("OPENAI_API_KEY")
if api_key is None:
    api_key = getpass("Please enter your OpenAI API key: ")

# Initialize OpenAI client
client = OpenAI(api_key=api_key)


def generate_text_with_simulated_attention(prompt, max_tokens=50):
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        max_tokens=max_tokens,
    )
    generated_text = response.choices[0].message.content

    # Simulate attention weights (this is not real attention data from GPT-3.5)
    words = generated_text.split()
    attention = np.random.rand(len(words), len(words))
    attention /= attention.sum(axis=1, keepdims=True)  # each row sums to 1
    return generated_text, attention, words


# Generate text and visualize simulated attention
prompt = "The future of AI in healthcare"
generated_text, attention, words = generate_text_with_simulated_attention(prompt)

# Visualize attention weights
plt.figure(figsize=(10, 8))
sns.heatmap(attention, cmap="viridis", xticklabels=words, yticklabels=words)
plt.title("Simulated Attention Weights in GPT-3.5 Text Generation")
plt.tight_layout()
plt.savefig("attention_visualization.png")  # Save the plot as an image file
plt.close()  # Close the plot to free up memory

print("Generated Text:")
print(generated_text)
print("\nAttention visualization saved as 'attention_visualization.png'")
```

Results and Analysis

The text generated by GPT-3.5 using the prompt “The future of AI in healthcare” was paired with simulated attention weights.

The heatmap visualization below represents the simulated attention weights, with the intensity of the color indicating the size of the weight between each pair of words.

Because the weights are random, the heatmap shows only what an attention map looks like, not how the words in the generated text are actually related. In a real attention mechanism, we might expect words like “AI,” “healthcare,” and “future” to have stronger connections due to their relevance to the prompt.

While our example uses simulated attention weights, it’s important to understand how this relates to actual model transparency:

- Attention Mechanism in Transformers: In transformer models like GPT-3.5, attention mechanisms allow the model to focus on different parts of the input when generating each word. Real attention weights would show which words the model considers most relevant for each prediction.

- Multi-head Attention: Actual transformer models use multi-head attention, where multiple sets of attention weights capture different types of relationships between words. Our simulation simplifies this to a single attention matrix.

- Layer-wise Attention: Transformer models have multiple layers, each with its own attention patterns. A comprehensive analysis would examine attention across all layers.

- Token Interactions: The heatmap visualizes how tokens (words or subwords) interact. In a real model, this would reveal linguistic patterns and semantic relationships learned by the model.

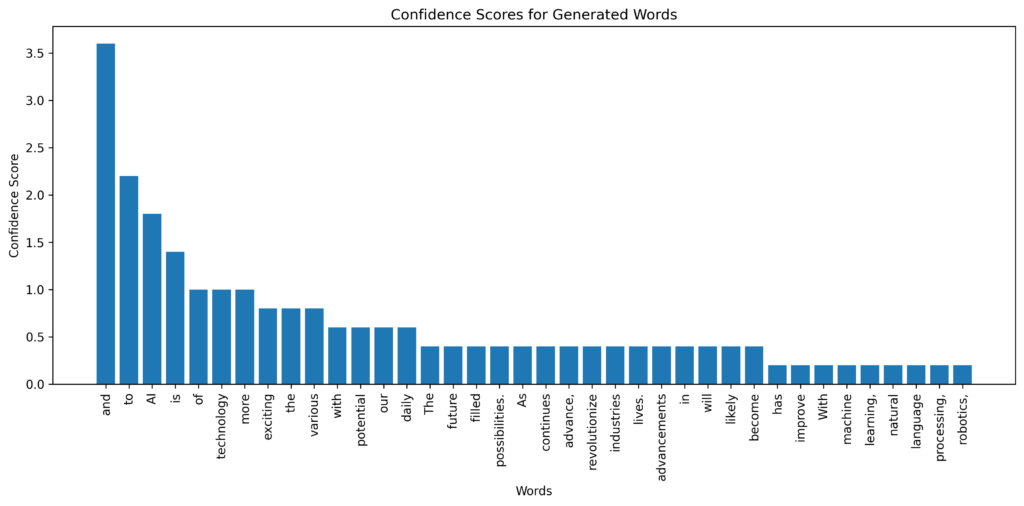
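For contrast with the random matrix used above, the following sketch computes attention weights the way a transformer layer does: scaled dot-product attention with several heads and a causal mask. It is a minimal illustration under stated assumptions. The embeddings and projection matrices are random (untrained), so the numbers carry no linguistic meaning, and the function name `causal_attention_weights` is hypothetical. What it does show accurately is the structure of real attention: one matrix per head, rows that sum to 1, and no attention to future tokens.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def causal_attention_weights(
    embeddings: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, num_heads: int
) -> np.ndarray:
    """Return attention weights of shape (num_heads, seq_len, seq_len)."""
    seq_len, d_model = embeddings.shape
    d_head = d_model // num_heads
    # Project to queries and keys, then split the model dimension into heads.
    q = (embeddings @ w_q).reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)
    k = (embeddings @ w_k).reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    # Causal mask: position i may only attend to positions <= i.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores, axis=-1)


rng = np.random.default_rng(0)
tokens = ["The", "future", "of", "AI"]
d_model = 8
embeddings = rng.normal(size=(len(tokens), d_model))  # untrained, random
w_q = rng.normal(size=(d_model, d_model))             # untrained, random
w_k = rng.normal(size=(d_model, d_model))             # untrained, random

weights = causal_attention_weights(embeddings, w_q, w_k, num_heads=2)
for head in range(weights.shape[0]):
    print(f"Head {head}:")
    print(np.round(weights[head], 2))
```

Each head produces its own lower-triangular matrix, which is why a single heatmap is a simplification. In a trained model these matrices would also differ from layer to layer.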

Confidence Approximation in GPT-3.5 Text Generation

I explore the concept of generating text with an approximation of confidence levels using GPT-3.5. Confidence scores can provide insights into the reliability and variability of the words generated by the model, which is particularly useful when working with generative AI.

Methodology

Using GPT-3.5, I generated multiple responses to a given prompt, “The future of AI is,” and analyzed the frequency of each word across the generated texts to approximate confidence levels. The assumption here is that words appearing more frequently in the different responses are more likely to be central or consistent in the model’s understanding of the prompt.

```python
import os
from getpass import getpass

import matplotlib.pyplot as plt
from openai import OpenAI

# Securely get the API key
api_key = os.environ.get("OPENAI_API_KEY")
if api_key is None:
    api_key = getpass("Please enter your OpenAI API key: ")

# Initialize OpenAI client
client = OpenAI(api_key=api_key)


def generate_with_confidence_approximation(prompt, max_tokens=50):
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        max_tokens=max_tokens,
        temperature=0.7,
        n=5,  # Generate 5 responses to approximate confidence
    )
    generated_texts = [choice.message.content for choice in response.choices]

    # Count how many responses contain each word. Using a set per response
    # keeps the score in [0, 1] even when a word repeats within one response.
    word_freq = {}
    for text in generated_texts:
        for word in set(text.split()):
            word_freq[word] = word_freq.get(word, 0) + 1

    # Approximate confidence as the fraction of responses containing the word
    main_text = generated_texts[0]
    result = []
    for word in main_text.split():
        confidence = word_freq.get(word, 1) / len(generated_texts)
        result.append((word, confidence))
    return result


def save_confidence_plot(generated_text, filename="confidence_scores.png"):
    # Keep one bar per distinct word, sorted by confidence in descending order
    unique_words = dict(generated_text)
    sorted_words = sorted(unique_words.items(), key=lambda x: x[1], reverse=True)

    # Separate words and scores
    words, scores = zip(*sorted_words)

    # Create a bar plot
    plt.figure(figsize=(12, 6))
    plt.bar(words, scores)
    plt.xticks(rotation=90)
    plt.xlabel("Words")
    plt.ylabel("Confidence Score")
    plt.title("Confidence Scores for Generated Words")
    plt.tight_layout()

    # Save the plot
    plt.savefig(filename, dpi=300, bbox_inches="tight")
    plt.close()  # Close the figure to free up memory
    print(f"Plot saved as {filename}")


# Generate text with confidence approximation
prompt = "The future of AI is"
generated_text = generate_with_confidence_approximation(prompt)

# Print results
print(f"Prompt: {prompt}")
for word, confidence in generated_text:
    print(f"{word}: {confidence:.2f}")

# Save the plot
save_confidence_plot(generated_text)
```

Results and Interpretation

The bar chart below represents the confidence scores for each word in the first generated sequence. The higher the score, the more consistently the word appeared across the generated responses, suggesting a higher level of confidence by the model in that word.

In the example above, the word “and” shows the highest confidence score, indicating that it appeared most frequently across the different generated responses. Because “and” is a common function word, this score mostly reflects how often the word occurs in English text in general rather than any confidence about the content. Content words with lower confidence scores, like “robotics” and “natural,” appeared less frequently, reflecting the variability in how the model elaborated on different aspects of AI.

- Token Probability Distribution: In actual language models, each token is generated based on a probability distribution over the entire vocabulary. Our confidence scores approximate this by looking at consistency across multiple generations.

- Temperature and Sampling: The ‘temperature’ parameter in our API call affects the randomness of the output. Lower temperatures would likely result in higher confidence scores as the model becomes more deterministic.

- Contextual Confidence: In a fully transparent model, we would see how the confidence of each word prediction changes based on the preceding context.
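The effect of temperature on a token probability distribution can be illustrated with a few lines of NumPy. This is a sketch with made-up numbers: the four-word `vocab` and its `logits` are hypothetical, whereas a real model produces one logit per vocabulary entry at every step. The functions `token_distribution` and `entropy` are illustrative helpers, not part of any API.

```python
import numpy as np


def token_distribution(logits, temperature: float) -> np.ndarray:
    """Softmax over logits divided by the temperature."""
    scaled = np.asarray(logits, dtype=float) / temperature
    scaled -= scaled.max()  # subtract the max for numerical stability
    probs = np.exp(scaled)
    return probs / probs.sum()


def entropy(probs: np.ndarray) -> float:
    """Shannon entropy in nats; lower means a more peaked distribution."""
    probs = probs[probs > 0]
    return float(-(probs * np.log(probs)).sum())


vocab = ["bright", "uncertain", "exciting", "robotic"]  # hypothetical tokens
logits = [2.0, 1.0, 0.5, -1.0]                          # hypothetical scores

for temperature in (0.2, 0.7, 1.5):
    probs = token_distribution(logits, temperature)
    summary = ", ".join(f"{w}={p:.2f}" for w, p in zip(vocab, probs))
    print(f"T={temperature}: {summary} (entropy={entropy(probs):.2f})")
```

As the temperature drops, probability mass concentrates on the top token and the entropy falls. Repeated samples then agree more often, which is why the frequency-based scores above would rise at lower temperatures.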

Limitations of These Approaches

Simulation vs. Reality: Our simulated attention weights don’t reflect the actual internal mechanics of GPT-3.5. They serve as a conceptual tool rather than a true insight into the model’s decision-making process.

Simplified Confidence Metric: Our confidence approximation based on word frequency across multiple generations is a rough estimate and doesn’t capture the nuanced probability distributions in the actual model.

Black Box Nature of GPT-3.5: As an API-based model, GPT-3.5 doesn’t provide access to its internal representations, limiting our ability to truly explain its decisions.

Lack of Fine-grained Control: These methods don’t allow us to influence or adjust the model’s behavior based on our observations, which is a key aspect of true explainability.

Different AI models and tasks require varying approaches to transparency and explainability:

- Computer Vision Models: Techniques like Grad-CAM (Gradient-weighted Class Activation Mapping) are often used to visualize which parts of an image are most important for classification decisions.

- Traditional Machine Learning: Models like decision trees and linear regressions offer inherent interpretability, with clear relationships between inputs and outputs.

- Reinforcement Learning: Approaches like reward decomposition and state importance visualization help explain agent behavior in complex environments.

- Natural Language Processing: Besides attention visualization, techniques like LIME (Local Interpretable Model-agnostic Explanations) can provide insights into which input words most influenced a particular output.
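The perturbation idea behind tools like LIME can be sketched with a simpler relative, leave-one-out occlusion: remove one word at a time and record how much the model score changes. This is not LIME itself, which fits a local surrogate model on many random perturbations. The scorer below (`toy_score`, with its `WEIGHTS` table and basic negation handling) is a hypothetical stand-in for a real NLP model.

```python
from typing import Dict, List, Tuple

# Hypothetical word weights standing in for a trained model.
WEIGHTS: Dict[str, float] = {"helpful": 1.5, "accurate": 1.0, "slow": -1.0}


def toy_score(tokens: List[str]) -> float:
    """Sum word weights; the word 'not' flips the sign of the next word."""
    total, negate = 0.0, False
    for token in tokens:
        if token == "not":
            negate = True
            continue
        weight = WEIGHTS.get(token, 0.0)
        total += -weight if negate else weight
        negate = False
    return total


def leave_one_out(tokens: List[str]) -> List[Tuple[str, float]]:
    """Attribution of each word = full score minus score without that word."""
    full = toy_score(tokens)
    attributions = []
    for i, token in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        attributions.append((token, full - toy_score(reduced)))
    return attributions


tokens = "the answer was helpful and not slow".split()
attributions = leave_one_out(tokens)
for token, value in attributions:
    print(f"{token:>8}: {value:+.1f}")
```

Here the word "not" receives the largest attribution because removing it turns "not slow" into "slow". A purely additive view of the model would miss that interaction, which is one reason perturbation-based methods are popular for text.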

Future Trends and Challenges

As the field of AI continues to evolve, several trends and challenges in transparency and explainability are emerging:

- Regulatory Pressure: Increasing regulatory focus on AI transparency, such as the EU’s AI Act, which entered into force in August 2024, will drive innovation in explainable AI techniques.

- Model-Agnostic Explanations: Development of explanation methods that can work across different types of models, enhancing their applicability and comparability.

- Human-Centric Explanations: Moving beyond technical explanations to generate insights that are meaningful and actionable for non-expert users.

- Trade-off Between Performance and Explainability: Balancing the need for highly accurate, complex models with the demand for interpretable systems.

- Dynamic and Interactive Explanations: Tools that allow users to explore model behavior interactively, adjusting inputs and observing changes in real-time.

- Ethical AI Frameworks: Integration of explainability into broader ethical AI frameworks, ensuring transparency is part of responsible AI development from the ground up.

Conclusion

As generative AI becomes increasingly prevalent in our lives, transparency and explainability emerge as crucial elements in fostering trust and ensuring ethical AI development. Our exploration of real-world examples and practical demonstrations has illustrated both the potential and limitations of current approaches to AI transparency.

✅︎ The complexity of explaining advanced AI models requires innovative approaches, as demonstrated by our simulated attention weights and confidence approximations.

✅︎ Different AI domains necessitate tailored explainability methods, from the inherent interpretability of decision trees to complex visualizations for deep neural networks.

✅︎ Future trends point towards more human-centric explanations, model-agnostic techniques, and the integration of explainability into ethical AI frameworks.

✅︎ Challenges remain in balancing model performance with interpretability and developing explanation methods that can keep pace with rapidly advancing AI technologies.